PhD Candidate | Augmented Reality Researcher | AR Game Developer

LinkedIn | Website

About Me

I am a PhD candidate at the University of Canterbury, specializing in Location-Based Augmented Reality (AR) games. With over 10 years of experience in software development, I have worked on immersive AR applications, technical documentation, and educational content creation. My current research focuses on designing and evaluating AR multiplayer games to connect people and places remotely through playful interactions.

Read More: https://www.hitlabnz.org/index.php/project/location-based-ar-game-2/

Augmented Reality Project Highlights

AR Hide-and-Seek Game - Remote Gameplay with Niantic LiDAR Scanning and Splats

Platform: Niantic Lightship ARDK, Scaniverse and Unity 3D

Description

This is a location-based multiplayer hide-and-seek game that leverages augmented reality (AR) to connect players across remote locations. The Hider uses Niantic’s Lightship ARDK to place virtual objects in a scanned physical space, which the Seeker must find using real-time feedback mechanisms, such as particle effects and vibrations. The game features spatial mapping and object manipulation via Niantic’s VPS (Visual Positioning System) and integrates Scaniverse to capture accurate 3D models of the playing environment. The real-time interaction and remote collaboration offer a novel AR experience, extending traditional hide-and-seek gameplay with dynamic, interactive elements in a virtual overlay of the real world.

Key Features:

- Dynamic object manipulation: Hiders can alter the size, position, and even rotation of hidden objects in real-time, challenging Seekers to navigate more complex spatial environments.

- VPS-enhanced precision: The game utilizes Niantic’s VPS for exact mapping of the playing space, creating an immersive environment where hidden objects align with real-world coordinates.

- Real-time AR feedback for Seekers: Seekers receive visual particle effects and device vibrations to guide their search, adding to the sense of immersion and challenge.

- Multiplayer support: Players from remote locations can interact in real-time, using live voice communication and shared AR interfaces to enhance the feeling of connection and collaboration.

- Innovative spatial decision-making: Players must adapt to both real and virtual spaces, developing new strategies for hiding and seeking across mixed-reality environments.
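
The Seeker feedback listed above could, for example, be driven by the Seeker's distance to the hidden object. The following is a minimal Python sketch under stated assumptions, not the game's actual implementation (which is built in Unity with Lightship ARDK): the function name `feedback_intensity`, the linear falloff, and the 10-metre default range are all hypothetical and chosen only for illustration.

```python
import math


def feedback_intensity(seeker_pos, object_pos, max_range_m=10.0):
    """Map Seeker-to-object distance to a feedback strength in [0, 1].

    Hypothetical helper: 1.0 means the Seeker is at the object,
    0.0 means the Seeker is at or beyond max_range_m (an assumed value).
    Positions are (x, y, z) tuples in metres.
    """
    if max_range_m <= 0:
        raise ValueError("max_range_m must be positive")
    distance = math.dist(seeker_pos, object_pos)  # Euclidean distance
    # Linear falloff, clamped so the result never goes below zero.
    return max(0.0, 1.0 - distance / max_range_m)
```

A value like this could then scale both the particle emission rate and the vibration strength, so the two cues intensify together as the Seeker gets closer.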

Next Step: Migration of Niantic Lightship ARDK Project to Niantic Studio

As part of my ongoing work with Niantic Lightship ARDK for the AR Hide-and-Seek Game, I am excited to explore the potential of migrating the project to Niantic Studio. With the platform’s newly launched features, such as the real-time visual interface and dynamic 3D environment manipulation, Niantic Studio presents an excellent opportunity to enhance the core gameplay mechanics of my project. Leveraging tools like Gaussian splats, which allow for high-fidelity 3D scanning of real-world locations, can take the immersive AR experience of the game to the next level.

The Visual Positioning System (VPS) in Niantic Studio would be instrumental in ensuring accurate mapping of the game’s physical and virtual environments. It would enable real-time feedback for Seekers and allow Hiders to place objects dynamically in more complex, interactive settings. Furthermore, the cross-platform capabilities of Niantic Studio would allow deployment on both mobile devices and XR headsets, making the game accessible to a wider audience.

By integrating my existing Niantic Lightship ARDK work with Niantic Studio’s innovative tools, I plan to create a more interactive, immersive, and accessible version of the hide-and-seek game, suitable for various devices and geographies.

Connecting Remote Places and Players with a Location-Based Augmented Reality Game Design

This original project explores how remote physical locations can be connected through immersive, AR-based gaming experiences. In this game design, two players—situated in different locations—are engaged in a collaborative, real-time interaction. One player shares their location, while the other collects resources from their physical environment to help the first player defend against virtual enemies.

The core goal is to foster a sense of spatial presence and immersion by enabling players to share their environments seamlessly through three distinct AR game modes: tabletop, overlay, and window. These modes are designed to create a shared sense of connection, allowing players to experience a remote location as if it were part of their own space. The project uses Niantic's VPS and LiDAR technology for 3D scanning and remote interaction, blending digital objects with real-world environments in a meaningful way.

Key Features:

- Three distinct AR modes: Tabletop, overlay, and window modes for remote gameplay, each offering unique interaction experiences.

- Resource collection mechanic: Players collect real-world resources to share across locations, promoting physical activity and collaboration.

- Spatial presence and immersion: Niantic's real-time meshing and VPS ensure accurate alignment of virtual objects in real-world spaces.

- Real-time multiplayer support: Connecting players in different locations with minimal latency for seamless interactions.

- Integration of Niantic's 3D scanning framework: For enhanced representation and interactivity between remote physical locations.

We have published our work and are currently conducting a follow-up user study.

Sri Wickramasinghe Y., Lukosch S., and Lukosch H. (2023) Designing Immersive Multiplayer Location-Based Augmented Reality Games with Remotely Shared Spaces. In Becu N (Eds). Simulation and Gaming for Social and Environmental Transitions: Proceedings of the 54th Conference of the International Simulation and Gaming Association: 204-214. https://shs.hal.science/halshs-04209935

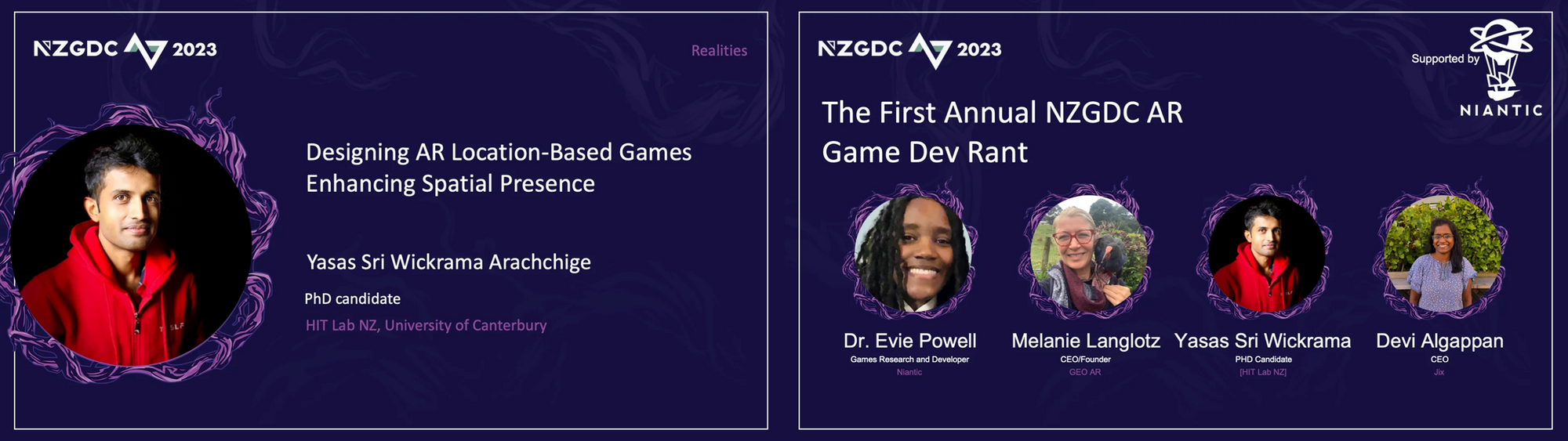

Public Speaking Experience at International Game Developers Conferences and Events

I have been a public speaker at various international conferences for over five years, presenting my research on augmented reality (AR) and location-based games. A highlight of my speaking career is my multiple appearances at the New Zealand Game Developers Conference (NZGDC), a prominent annual event in the game development community.

Most notably, I was part of the first-ever Augmented Reality Developers' Rant at NZGDC, where I shared insights on AR design and game development alongside esteemed professionals like Dr. Evie Powell from Niantic and Melanie Langlotz from GEO AR. In this session, I discussed my PhD research on location-based augmented reality games, focusing on enhancing spatial presence and creating immersive multiplayer experiences.

Additionally, I have conducted hands-on workshops at NZGDC, helping developers and enthusiasts alike to build their own AR experiences using cutting-edge technologies like Niantic Lightship ARDK.

Through these events, I’ve had the opportunity to not only present my findings but also to engage with a community of innovators who are pushing the boundaries of AR and game development.

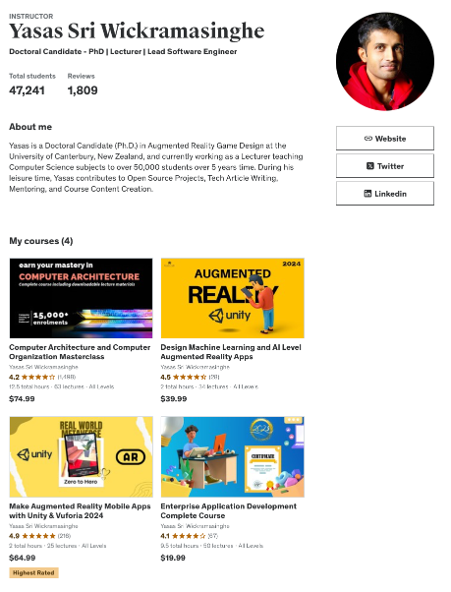

Udemy Instructor with 45,000+ Students

I am a Udemy instructor with over 45,000 students globally, specializing in educational content related to Augmented Reality (AR). My courses are designed to help both beginners and advanced learners navigate the rapidly growing field of AR development. One of my flagship courses focuses on using Niantic Lightship ARDK, where I provide hands-on tutorials on building location-based AR applications, covering essential topics like AR object placement, multiplayer AR experiences, and integrating real-world data.

Moving forward, I’m excited to expand my portfolio with a new course centered around Niantic Studio. This upcoming course will dive into the powerful new tools offered by Niantic Studio, helping developers build immersive 3D web experiences and WebXR games with features such as real-time object manipulation, Visual Positioning System (VPS) integration, and seamless cross-platform deployment.

Course Highlights:

- Comprehensive walkthroughs for Niantic Lightship ARDK development.

- New course on Niantic Studio, exploring 3D AR interactions, resource collection, and real-time environment mapping.

- Practical tutorials aimed at both AR beginners and experienced developers.

You can check out my current courses and stay tuned for my latest release on Niantic Studio via my Udemy Instructor Profile.